Abstract

Detecting sarcasm effectively requires a nuanced understanding of context, including vocal tones and facial expressions. The progression towards multimodal computational methods in sarcasm detection, however, faces challenges due to the scarcity of data. To address this, we present AMuSeD (Attentive deep neural network for MUltimodal Sarcasm dEtection incorporating bi-modal Data augmentation). This approach utilizes the Multimodal Sarcasm Detection Dataset (MUStARD) and introduces a two-phase bimodal data augmentation strategy. The first phase involves generating varied text samples through Back Translation from several secondary languages. The second phase involves the refinement of a FastSpeech 2-based speech synthesis system, tailored specifically for sarcasm to retain sarcastic intonations. Alongside a cloud-based Text-to-Speech (TTS) service, this Fine-tuned FastSpeech 2 system produces corresponding audio for the text augmentations. We also investigate various attention mechanisms for effectively merging text and audio data, finding self-attention to be the most efficient for bimodal integration. Our experiments reveal that this combined augmentation and attention approach achieves a significant F1-score of 81.0% in text-audio modalities, surpassing even models that use three modalities from the MUStARD dataset.

Type

Publication

arXiv:2412.10103 [cs.CL]

This paper presents AMuSeD, a novel approach to multimodal sarcasm detection that addresses the challenge of data scarcity through innovative bimodal data augmentation techniques. By combining Back Translation for text augmentation with advanced speech synthesis methods for audio generation, our model effectively captures the nuanced interplay between textual and auditory elements in sarcastic expressions.

Our research demonstrates that self-attention mechanisms with skip connections provide optimal feature fusion for sarcasm detection, and that Fine-tuned FastSpeech 2 models are more effective at preserving sarcastic prosody compared to other speech synthesizers. The results show that our bimodal approach achieves state-of-the-art performance, even surpassing models that utilize three modalities from the MUStARD dataset.

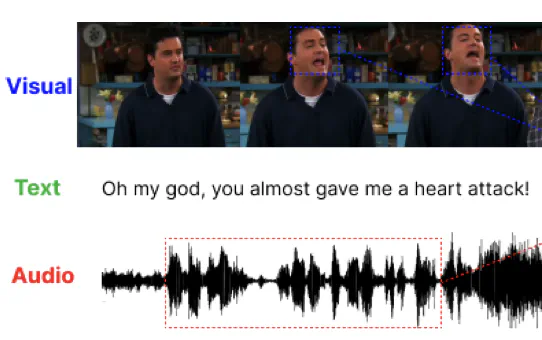

Multimodal sarcasm detection using visual, textual, and audio cues

Multimodal sarcasm detection using visual, textual, and audio cues