Intra-modal Relation and Emotional Incongruity Learning using Graph Attention Networks for Multimodal Sarcasm Detection

Apr 11, 2025

1 min read

Devraj Raghuvanshi

Xiyuan Gao

Zhu Li

Shubhi Bansal

Matt Coler

Nagendra Kumar

Shekhar Nayak

ICASSP 2025 Poster: Multimodal Sarcasm Detection

Abstract

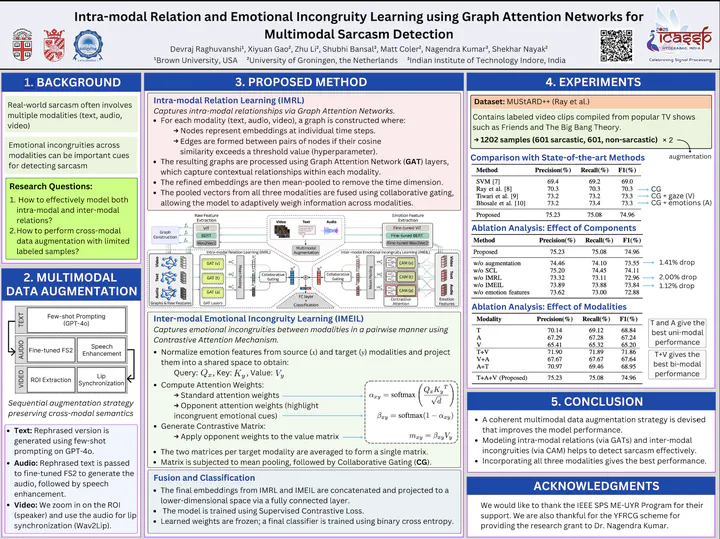

Real-world sarcasm often involves multiple modalities (text, audio, video), and emotional incongruity across those modalities can be an important cue for detecting it. This work presents a novel approach to multimodal sarcasm detection that leverages both intra-modal relations and inter-modal emotional incongruities. We introduce a Graph Attention Network-based model for capturing relationships within modalities and a Contrastive Attention Mechanism for identifying emotional incongruities between modalities. Our approach also includes a coherent multimodal data augmentation strategy to address limited labeled examples. Experiments on the MUStARD++ dataset demonstrate that our method outperforms state-of-the-art approaches, achieving significant improvements in precision, recall, and F1 scores.

Publication

In 2025 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP)

This paper presents a novel approach to multimodal sarcasm detection that combines Graph Attention Networks for intra-modal relation learning with a Contrastive Attention Mechanism for identifying emotional incongruities between modalities. Our model achieved state-of-the-art performance on the MUStARD++ dataset, with particularly strong results when integrating all three modalities (text, audio, and visual).

Key contributions include:

- A coherent multimodal data augmentation strategy addressing limited labeled examples

- Effective modeling of intra-modal relations via Graph Attention Networks

- Inter-modal emotional incongruity detection through a specialized Contrastive Attention Mechanism

- Comprehensive ablation studies demonstrating the value of each component and modality combination
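
For readers unfamiliar with graph attention, the intra-modal relation learning mentioned above can be illustrated with a minimal sketch of a generic single-head graph attention layer. This is not the model from the paper: the function name `gat_layer`, the chain-shaped graph, the feature sizes, and the random weights are all assumptions chosen for illustration. The nodes could be read as, for example, segment-level embeddings within one modality.

```python
import numpy as np


def leaky_relu(x, slope=0.2):
    return np.where(x > 0, x, slope * x)


def gat_layer(h, adj, W, a, slope=0.2):
    """Generic single-head graph attention layer (illustrative, not the paper's model).

    h:   (N, F_in) node features
    adj: (N, N) binary adjacency matrix that includes self-loops
    W:   (F_in, F_out) shared linear projection
    a:   (2 * F_out,) attention vector
    Returns the (N, F_out) updated features and the (N, N) attention matrix.
    """
    z = h @ W                                   # project every node
    f_out = z.shape[1]
    # a^T [z_i || z_j] splits into a term for node i plus a term for node j
    src = z @ a[:f_out]
    dst = z @ a[f_out:]
    e = leaky_relu(src[:, None] + dst[None, :], slope)
    e = np.where(adj > 0, e, -np.inf)           # attend only to neighbors
    e = e - e.max(axis=1, keepdims=True)        # stabilize the softmax
    alpha = np.exp(e)
    alpha = alpha / alpha.sum(axis=1, keepdims=True)
    return alpha @ z, alpha                     # attention-weighted aggregation


# Hypothetical toy input: 4 nodes connected in a chain, with self-loops.
rng = np.random.default_rng(0)
N, F_in, F_out = 4, 8, 5
h = rng.normal(size=(N, F_in))
adj = np.eye(N) + np.eye(N, k=1) + np.eye(N, k=-1)
W = rng.normal(size=(F_in, F_out))
a = rng.normal(size=2 * F_out)
out, alpha = gat_layer(h, adj, W, a)
```

Each row of `alpha` is a probability distribution over that node's neighbors, so `out` is a learned, neighbor-weighted mixture of the projected features. A practical implementation would typically use several attention heads and trained parameters rather than random ones.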